By Mayank Kumar Singh, Senior Engineer at Sony Research India

In this blog, Mayank Kumar Singh breaks down the paper titled ‘Iteratively Improving Speech Recognition and Voice Conversion’, co-authored by Mayank Kumar Singh, Naoya Takahashi and Onoe Naoyuki, which has been accepted at the INTERSPEECH Conference 2023.

For demo samples, please refer to the website: https://demosamplesites.github.io/IterativeASR_VC/

Speech processing technologies such as voice conversion (VC) and automatic speech recognition (ASR) have improved dramatically over the past decade owing to advances in deep learning. However, training these models remains challenging in low-resource domains, where they tend to over-fit and do not generalize well enough for practical applications.

In this paper, we propose to iteratively improve a voice conversion model along with an automatic speech recognition model.

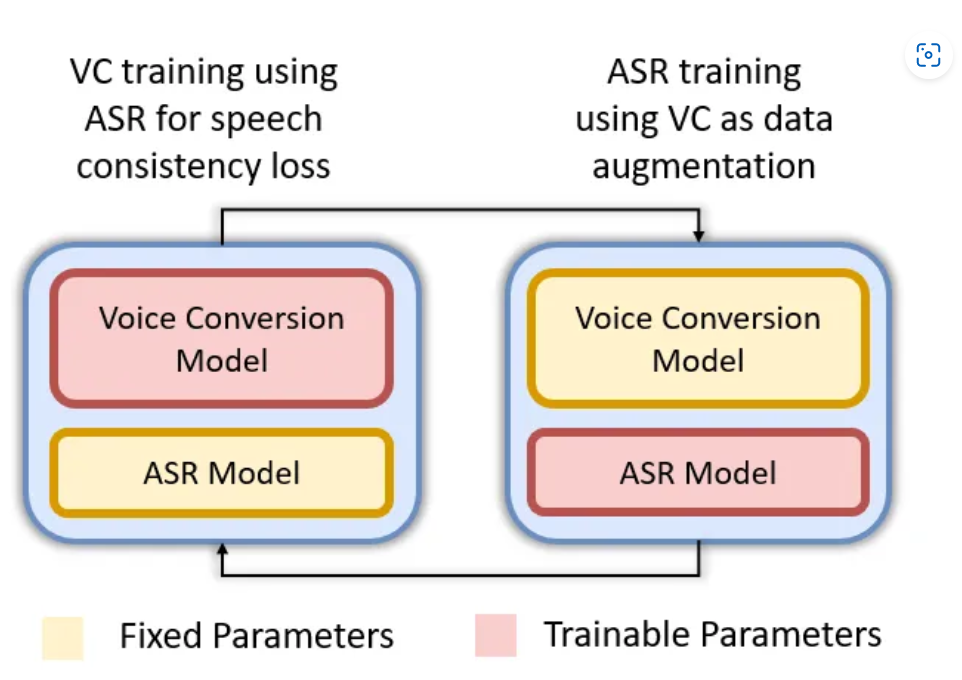

Because VC models often rely on an ASR model to extract content features or to impose a content consistency loss, any degradation of the ASR model directly degrades the quality of the VC model. On the other hand, a variety of data augmentation techniques, voice conversion among them, have been proposed to improve the generalization capability of ASR models.
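To make the content consistency idea concrete, here is a minimal plain-Python sketch that compares ASR features of a source utterance and its converted version. It is an illustration under stated assumptions, not the paper's loss: `content_consistency_loss` and `toy_asr_encoder` are hypothetical names, the L1 distance is an assumed choice, and the two feature sequences are assumed to be frame-aligned (same number of frames), which a real system has to arrange explicitly.

```python
from typing import Callable, List, Sequence

# A feature sequence: one list of floats per frame (frames x dims).
Features = List[List[float]]


def content_consistency_loss(
    source_audio: Sequence[float],
    converted_audio: Sequence[float],
    asr_encoder: Callable[[Sequence[float]], Features],
) -> float:
    """Mean absolute (L1) difference between ASR features of source and converted audio.

    Assumption: the encoder returns frame-aligned features for both inputs.
    The L1 form is illustrative; other distances are equally plausible.
    """
    src = asr_encoder(source_audio)
    conv = asr_encoder(converted_audio)
    if len(src) != len(conv):
        raise ValueError("feature sequences must have the same number of frames")
    # Element-wise absolute differences over all frames and feature dimensions.
    diffs = [abs(a - b) for f_s, f_c in zip(src, conv) for a, b in zip(f_s, f_c)]
    return sum(diffs) / len(diffs) if diffs else 0.0


def toy_asr_encoder(audio: Sequence[float]) -> Features:
    # Hypothetical stand-in for a pretrained ASR encoder: one 1-D "feature" per sample.
    return [[x] for x in audio]


# Identical content gives zero loss; a distorted frame raises it.
print(content_consistency_loss([0.0, 1.0, 2.0], [0.0, 1.0, 2.0], toy_asr_encoder))  # 0.0
```

In an actual VC training loop this loss would be computed on differentiable tensors, with the ASR encoder typically kept frozen; the plain-Python version only shows the arithmetic. It also makes the dependency plain: if the ASR encoder's features are poor, the loss gives the VC model poor guidance.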

This mutual dependence creates a causality dilemma: a poor ASR model hampers VC training, which in turn yields low-quality data augmentation for training the ASR model. Conversely, improving the ASR model should lead to a better VC model, which should produce better augmentation samples for further improving the ASR model. Motivated by this, we propose to iteratively improve the ASR model by using the VC model as a data augmentation method for ASR training, while simultaneously improving the VC model by using the ASR model for linguistic content preservation.

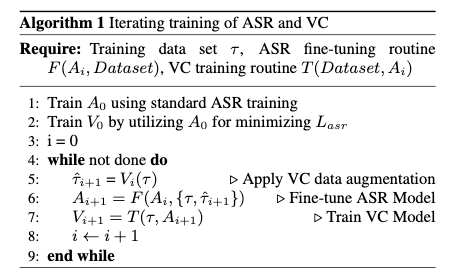

Algorithm 1: Iterative training of ASR and VC models
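The alternation described by Algorithm 1 can be sketched roughly as follows. This is a toy sketch, not the paper's implementation: `train_asr`, `train_vc`, `convert` and `iterative_training` are hypothetical placeholders, real audio and model training are replaced by simple records, and the initialization (an ASR model first trained on the original data alone) is an assumption. What it does show is the loop structure: at iteration k the VC model is trained with content guidance from the previous ASR model, its converted utterances keep their source transcripts as labels, and the ASR model is then retrained on the real plus augmented data.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Toy stand-in for an utterance: (speaker_id, transcript). Real audio is omitted.
Utterance = Tuple[str, str]


@dataclass
class ASRModel:
    iteration: int
    num_train_utterances: int  # how much (real + augmented) data it was trained on


@dataclass
class VCModel:
    iteration: int
    content_asr_iteration: int  # which ASR model supplied content features/loss


def train_asr(real: List[Utterance], augmented: List[Utterance], iteration: int) -> ASRModel:
    # Hypothetical placeholder for ASR training on real + VC-augmented data.
    return ASRModel(iteration=iteration, num_train_utterances=len(real) + len(augmented))


def train_vc(real: List[Utterance], asr: ASRModel, iteration: int) -> VCModel:
    # Hypothetical placeholder for VC training that uses `asr` for content preservation.
    return VCModel(iteration=iteration, content_asr_iteration=asr.iteration)


def convert(vc: VCModel, utt: Utterance, target_speaker: str) -> Utterance:
    # VC changes the speaker but keeps the linguistic content,
    # so the source transcript remains a valid label for the converted audio.
    _, transcript = utt
    return (target_speaker, transcript)


def iterative_training(
    real: List[Utterance], target_speakers: List[str], num_iterations: int
) -> Tuple[ASRModel, Optional[VCModel]]:
    asr = train_asr(real, [], iteration=0)  # assumed: initial ASR on real data only
    vc: Optional[VCModel] = None
    for k in range(1, num_iterations + 1):
        vc = train_vc(real, asr, iteration=k)  # VC guided by the latest ASR model
        augmented = [
            convert(vc, utt, spk)
            for utt in real
            for spk in target_speakers
            if spk != utt[0]  # only convert to speakers other than the source
        ]
        asr = train_asr(real, augmented, iteration=k)  # ASR retrained with VC augmentation
    return asr, vc


real = [("spk_a", "hello world"), ("spk_b", "good morning")]
asr, vc = iterative_training(real, ["spk_a", "spk_b", "spk_c"], num_iterations=3)
print(asr)  # ASRModel(iteration=3, num_train_utterances=6)
print(vc)   # VCModel(iteration=3, content_asr_iteration=2)
```

Keeping the source transcript as the label is what lets VC output serve as ASR training data; in each round, a better ASR model should in turn provide better content guidance to the next VC model.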

To learn more about Sony Research India’s research publications, visit the ‘Publications’ section on our ‘Open Innovation’ page.